Math 360: Supplementary Thoughts

2015-09-05

Andrew Ross

Math Department, Eastern Michigan University

Contents

The Big Picture (Bigger than Statistics)

The

Big Picture (just inside this class).

Procedural

Advice: CI vs HT, and Controls

Sample

project titles from previous years

Chapter

2.3: Comparative Experiments

Controls:

Positive and Negative

Algorithms

for Computing the Mean and the Variance

Chapter 6.7: Estimating Probabilities Empirically

Using Simulation

Chapter

7.5: Binomial and Geometric

Chapter 8: Sampling Distributions

Confidence Intervals Other Than 95%

Common measures of Effect Size

Big-Picture

skeptical discussion on use of p-values and Hypothesis Testing

Costs of Type I vs Type II error

Reading Prompts for a Concept-based quiz on CI and HT

Testing

by Overlapping Confidence Intervals

What

Data Scientists call A/B Testing

2-sample

z-test for proportions but paired (dependent) rather than independent

Preliminaries

I always want to hear your

thoughts on the class so far, so I created an online form for anonymous

feedback. Please use it to let me know what you think (or if you are not

concerned about anonymity, just send an email) :

https://docs.gooREMOVETHISgle.com/spreadsheet/embeddedform?formkey=dDF2U2djSDAtRmFUM0NsTllmOFJ2ZFE6MQ

You can use this throughout the semester.

You should remove the REMOVETHIS; I just put it in there to deter automated

webcrawlers.

Ethics

The American Statistical Association (ASA) has a statement of Ethical Guidelines for Statistical Practice

The Institute for Operations Research and Management Science (INFORMS) has a Certified Analytics Professional program that includes this Code of Ethics.

And here is a slightly more light-hearted code, originally

written for a financial setting:

Emanuel Derman’s Hippocratic Oath of Modeling

• I will remember that I didn’t make the world and that it

doesn’t satisfy my equations.

• Though I will use models boldly to estimate

value, I will not be overly impressed by mathematics.

• I will never sacrifice reality for elegance

without explaining why I have done so. Nor will I give the people who use my

model false

comfort about its accuracy. Instead, I will make

explicit its assumptions and oversights.

• I understand that my work may have enormous

effects on society and the economy, many of them beyond my comprehension.

Some papers to consider:

Ethical Statistics and Statistical Ethics: Making an

Interdisciplinary Module

Critical Values and Transforming Data: Teaching Statistics

with Social Justice

And a report on how web sites return different search

results, prices, or ads based on the apparent race or location of the searcher:http://www.wnyc.org/story/dba2f97dd61e2035fd433a48/?utm_source=/story/128722-prime-number/&utm_medium=treatment&utm_campaign=morelikethis

searching for a traditionally black-sounding

name such as “Trevon Jones” is 25 percent more likely to generate ads

suggesting an arrest record—such as “Trevon Jones Arrested?”—than a search for

a traditionally white-sounding name like “Kristen Sparrow,” according to a January

2013 study by Harvard professor

Latanya Sweeney. Sweeney found this advertising disparity even for names in

which people with the white-sounding name did have a criminal record and people

with the black-sounding name did not have a criminal record.

And later in the report,

Our tests of the Staples website showed that

areas with higher average income were more likely to receive discounted prices

than lower-income areas.

The Big Picture

(Bigger than Statistics)

Statistics is its own field, of course, but it is related to many others. People now talk about Analytics, which is often broken into

· Descriptive Analytics: what did happen? (EMU Math 360)

· Predictive Analytics: what will happen? (EMU Math 360, EMU Math 419W)

· Prescriptive Analytics: what’s the optimal thing to do? (EMU Math 319, EMU Math 560)

Some people summarize this as Describe, Anticipate, Decide = D.A.D.

Of course other EMU statistics courses relate to Descriptive and Predictive analytics; I’ve only listed the ones I teach.

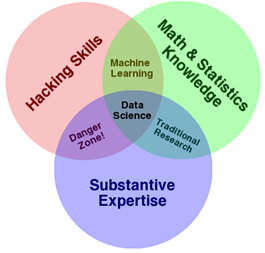

“Data Science” is another hot term these days. Some say it’s a combination of Statistics/Math/Operations Research, Computer Science, and Substantive Expertise:

http://drewconway.com/zia/2013/3/26/the-data-science-venn-diagram

The book “Doing Data Science”, page 42, says "Now the key here that makes data science special and distinct from statistics is that this data product then gets incorporated back into the real world, and users interact with that product, and that generates more data, which creates a feedback loop."

I disagree with that on two levels: first, plenty of people do what they’d call data science that doesn’t interact with users/create a feedback loop, and second, the idea of interaction or creating a feedback loop could still be called statistics, or Advanced Analytics/prescriptive analytics.

“Big Data” is also a hot topic these days. You could say it is any data set that is too big to fit onto one computer, and must be split across multiple computers. For example, Facebook profiles and clickstreams would constitute big data; some physics experiments also generate big data (100 TB per day!) Big data is often characterized by the “3 V’s”: Volume, Velocity, and Variety. Volume means how much data there is, Velocity means how fast it comes in, and Variety is the mix of numbers, text, sounds, and images. We won’t be dealing with Big Data in this class, but ask me if you want to know more about it.

The Big Picture (just inside this class)

We will use Excel a lot, even though there are well-documented problems with it for statistics; for example,

http://panko.shidler.hawaii.edu/SSR/Mypapers/whatknow.htm

http://www.analyticbridge.com/profiles/blogs/comprehensive-list-of-excel-errors-inaccuracies-and-use-of-wrong-

Concept maps of Statistics

http://derekbruff.org/blogs/math216/?p=675

http://bioquest.org/numberscount/statistics-concept-map/

http://iase-web.org/documents/papers/icots6/5a2_bulm.pdf

http://cmapskm.ihmc.us/rid=1052458963987_1845442706_8642/Descriptive%20statistics.cmap

http://cmapskm.ihmc.us/servlet/SBReadResourceServlet?rid=1052458963987_97837233_8644&partName=htmltext

http://www.sagepub.com/bjohnsonstudy/maps/index.htm

Eight Big Ideas

1. Data beat anecdotes

2. Association is not causation

3. The importance of study design

4. The omnipresence of variation

5. Conclusions are uncertain.

6. Observation versus experiment

7. Beware the lurking variable [confounding]

8. Is this the right question?

http://www.statlit.org/pdf/2013-Schield-ASA-1up.pdf

What Your Future Doctor Should Know About Statistics: Must-Include

Topics for Introductory Undergraduate Biostatistics

Brigitte Baldi & Jessica Utts

Pages: 231-240

DOI: 10.1080/00031305.2015.1048903

What do Future Senators, Scientists, Social Workers, and Sales Clerks Need to Learn from Your Statistics Class? http://www.ics.uci.edu/~jutts/APTalk.pdf

1. Observational studies, confounding, causation 2. The problem of multiple testing 3. Sample size and statistical significance 4. Why many studies fail to replicate 5. Does decreasing risk actually increase risk? 6. Personalized risk 7. Poor intuition about probability/expected value 8. The prevalence of coincidences 9. Surveys and polls – good and not so good 10. Average versus normal

Math Is Music; Statistics Is Literature

Richard D. De Veaux, Williams College, and Paul F. Velleman, Cornell University

S even unnatural acts of statistical thinking:

➊ Think critically. Challenge the

data’s credentials; look for biases and lurking variables.

➋ Be skeptical. Question authority

and the current theory. (Well, okay, sophomores do find this natural.)

➌ Think about variation, rather than

about center.

➍ Focus on what we don’t know. For

example, a confidence interval exhibits how much we don’t know about the

parameter.

➎ Perfect the process. Our best

conclusion is often a refined question, but that means a student can’t memorize

the ‘answer.’

➏ Think about conditional

probabilities and rare events. Humans just don’t do this well. Ask any gambler.

But, without this, the student can’t understand a p-value

7 Embrace vague concepts. Symmetry, center, outlier, linear … the list of concepts fundamental to statistics but left without firm definitions is quite long. What diligent student wanting to learn the ‘right answer’ wouldn’t be dismayed?

Statistics Habits of Mind http://info.mooc-ed.org.s3.amazonaws.com/tsdi1/Unit%202/Essentials/Habitsofmind.pdf

● Always consider the context of data ● Ensure the best measure of an attribute of interest ● Anticipate, look for, and describe variation ● Attend to sampling issues ● Embrace uncertainty, but build confidence in interpretations ● Use several visual and numerical representations to make sense of data ● Be a skeptic throughout an investigation

Proposal/Project Guidance

For project proposals, a few

things to remember:

* I encourage team projects, but solo projects

are allowed as well.

* Team sizes are limited to 2

people (arranged with whomever you want).

* if anyone is looking for a partner, please let me know and I will do my best to play eHarmony.

* There is no competition for

project topics. Multiple people or teams may do the same project.

* I STRONGLY ENCOURAGE you to chat with me about

project ideas well before the proposal deadline, either in person or by email.

* After the chatting is done, feel free to email

me a draft of a proposal (and perhaps a data set) for some informal feedback.

* See below some sample project titles from last

year's Math 360 class.

Proposals

Proposals will generally be 1 to 2 pages, and

will contain:

* title of project

* author(s) names

* a description of the problem you are facing

* a description of the available data or data

collection plans (incl. a copy of the data if it’s already available, perhaps

as a separate file)

* a description of the proposed analysis

* literature search? for many projects, no

literature search is needed. Others may benefit from a literature search, and

may get bonus points for doing one. Giving proper credit to your sources of

information or ideas is always required.

* data? If you already have the data, include a

spreadsheet of it as a 2nd file when you upload the presentation

If your project idea depends on getting a data

set from your boss at work, you need to have the data set in hand by the time

of the proposal. I've had a few projects go bad when a boss doesn't come

through with promised data.

A proposal does not lock you in to a topic or

analysis method. If your project is not working out, contact me immediately and

together we will find a new project topic.

Procedural Advice: CI vs HT, and Controls

A general tip as you're doing your projects: doing confidence intervals is almost always better than doing a hypothesis test, because a CI can be converted into an HT very easily in your head (did the CI include zero? for example), but knowing the results of an HT doesn't give you much info about the related CI. There are some projects where a CI isn't applicable, though--often those related to Chapter 12 (chi-squared tests). The one nice thing about HT, though, is that you get a P-value, while the CI just lets you know that it was < 0.05 or whatever value you used for a CI.

It's important to create

artificial data sets similar to what your real data set is. That way, when you

do your processing, you can tell if you are getting what you expect to

get--it's a way of debugging. You start by copying the file with your original data

set, then in that copy, replacing the original data with artificial data. Then

you do your analysis on the artificial data. Once you've done it for artificial

data, you should be able to save another

copy of that file, then paste your real data in where the artificial data is,

and have all the calculations automatically update. This is vastly better than

trying to re-create the formulas in a new sheet, since that could introduce new

bugs.

To generate a Standard Normal in excel, use

=norminv(rand(),0,1)

To generate a non-standard normal with mean 5 and std.dev. 3, use

=norminv(rand(),0,1)*3 + 5

Another big advantage of creating artificial

data is then you can compute how much your output measurements change just due

to random chance, by running a whole bunch of random trials.

Someone asked me if the

random number generator in Excel is seedable (that is, can it be set to start

at the same sequence over and over). There's no interface for doing that, but I

researched the algorithm that the random number generator uses, and I've

implemented it in simple formulas in a posted spreadsheet. You may ignore this

if you want.

Another key component of some

projects is the idea of Cross-Validation. Instead of fitting models to the

entire data set, you pick a portion of it called the “Training” set and fit the

models to that. Then you use those fitted models to make predictions for the

rest of the data, called the “Test” set, to see which model does the best.

Actually, if you then want to quantify the prediction errors you might expect

to see, you need a 3rd portion of the data set: you fit the winning

model to the training & test set, then make predictions for that 3rd

portion, and measure the prediction error.

Doing Data Science says:

In-Sample,

Out-of-Sample, and Causality

We need to establish a strict concept of in-sample and out-of-sample data. Note

the out-of-sample data is not meant as testing data—that all happens inside in-sample data. Rather, out-of-sample data is

meant to be the data you use after finalizing your model so that you have

some idea how the model will perform in production. We should even restrict the

number of times one does out-of-sample analysis on a given dataset because, like it or not, we learn stuff about that data every

time, and we will subconsciously overfit to it even in different contexts, with

different models.

Next, we need to be careful to always perform causal modeling (note this

differs from what statisticians mean by causality). Namely, never use

information in the future to predict something now. Or, put differently, we

only use information from the past up and to the present

moment to predict the future. This is incredibly important in financial modeling. Note it’s

not enough to use data about the present if it isn’t actually available and

accessible at the present moment. So this means we have to be very careful with

timestamps of availability as well as timestamps of reference. This is huge

when we’re talking about lagged government data.

Final Report

Your final report should be a roughly 5-to-10-page technical

report (a Word file, usually). I don’t count pages, though, so don’t worry

about the exact length. Please use the

HomeHealthCare.doc file that I will email out as a template (remove their

content, type in your own content).

Please upload both your

report file and your Excel file at the same time. But, your report should have

copies of any relevant figures; don't just say "see the Excel file".

If you are part of a team project, _each_ person

should upload a copy of the presentation and report.

Presentations

For your final presentation, you have 2 options:

· A 5-minute Powerpoint-style presentation that you stand up and give to the class (roughly 5 slides), or

· A poster presentation, which often consists of about 12 Powerpoint slides, printed out on paper and taped to the wall of the classroom (don’t buy/use posterboard).

Each person or team of 2 may decide whether they want to do a poster or oral presentation. Either way, presentation materials should be uploaded to a dropbox inside EMU-online.

Please do not feel obligated

to dress up for our presentation day in Math 360. Anyone who does dress up will

be a few standard deviations from the mean, as statisticians say. Either way,

it will not affect your grade at all.

However, it is important to present in a professional way (aside from how you are dressed). If I write a letter of recommendation for you, I want to be able to say how polished your presentation was—not just your slides, but your manner of speaking. This can be especially important for future teachers. In a letter of recommendation I would hope I could say “While I’ve never observed ____ as they teach an actual class, their final project presentation in Math 360 convinces me that they have the presentation skills to be a great teacher.”

National Competition

I will recommend that some people submit their

work to the Undergraduate CLASS Project Competition (USCLAP)

http://homepages.dordt.edu/ntintle/usproc/USCLAP.htm

The writeup for that has the following page

limits (all in 11-point Arial, single-spaced, 1-inch margins):

1 page for title and abstract

<=3 pages for report

1 page for bibliography, if any (optional)

<=5 pages for appendices

So you might want to format

your paper that way if you’re thinking of entering the contest.

Note that if you are using

data from human subjects (or animals!) you will need to apply for permission

from EMU’s Institutional Review Board (IRB) to use your data in the USCLAP contest.

I can help you with this, but we need to do it early in the semester. If you

aren’t hoping to submit to the USCLAP, then IRB approval is usually not

required.

The judging criteria for that contest will be

the basis of the grading system for projects:

1. Description of the data source (15%)

2. Appropriateness and correctness of

data analysis (40%)

3. Appropriateness and correctness of

conclusions and discussion (20%)

4. Overall clarity and presentation (15%)

5. Originality and interestingness of the

study (10%)

NOTE: All essential materials addressing these

criteria must be in the report, not confined to the spreadsheet file.

You can see the guidelines I give to my other

project-based classes (Math 319, Math 419, Math 560) at this link:

http://people.emich.edu/aross15/project-guides/guides.html

though as you can see from the above, the

requirements for Math 360 are a little different because of the statistical

focus.

Also see:

Heiberger, Richard M., Naomi B. Robbins, and Jüergen

Symanzik. 2014. "Statistical Graphics Recommendations for the ASA/NCTM

Annual Poster Competition and Project Competition", Proc. of the Joint

Statistical Meetings, American Statistical Association, Arlington, VA.

Symanzik, Jüergen; Naomi B. Robbins, Richard M.

Heiberger. (2014). "Observations from the Winners of the 2013

Statistics Poster Competition --- Praise and Future Improvements." The

Statistics Teacher Network, 83, 2-5.

Sample project titles from previous years

Baseball player builds and home runs

Tennis serve accuracy

Noll-Scully simulation of sports rankings

Spring Training vs Regular Season

Anchoring effect

Finding a Piecewise Linear Breakpoint in Chemistry

data

Music participation and GPA

double-SIDS dependencies

Swimming times

Salary vs. Results in NCAA Tournament

NBA scores

Barbie Bungee Challenge

Incumbency advantage in elections

Comparing Distinct Audio Points in Classical and

Rock Music

Barbie Bungee Challenge

Airbags, seat belts, bike helmets

Spring Training vs Regular Season

Golden Ratio in Art

Gender differences in SAT scores

Home health care data

double-SIDS dependencies

GEAR-UP survey data

Normal distributions on Wall Street?

NBA scores

Naive Bayesian spam filtering

Honors college GPAs

Predicting Course Grades from Mid-Semester

Grades

Anchoring effect

Salary vs. Results in NCAA Tournament

Incumbency advantage in elections

An Analysis of Correlations between Event Scores in Gymnastics Using Linear Regression

Accounting Fraud and Benford's Law

Appointment-Based Queueing and Kingman's Approximation

Are Consumers Getting all the Coconut Chocolaty Goodness They’re Paying For?

Are regular M&Ms more variable in weight than Peanut M&Ms?

Barbie Bungee Experiment

Breaking Eggs in Minecraft

Calculus-Based Probability

Comparing the Efficiency of Introductory Sorting Algorithms

Distribution of File Sizes

Do students who score better on a test’s story problems score better on the test as a whole?

Do studying Habits affect your interest in math

Do young adults under 18 and 18 and older have the same completion rate of the 3-shot regimen for Gardasil?

Does age effect half-marathon completion time

Patterns in bulk discounts

Does having high payrolls mean you will win more Major League Baseball games?

Gardasil 3-shot vaccine completion, average number of shots

Getting Hot at the Right Time: A statistical analysis of variable relative strength in the NHL

Home Field Advantage in MLB, NFL, and NBA

How random are Michigan Club Keno and Java random numbers?

Ice Cream Sales and Temperature

Is there relationship between the length of songs at the #1 spot on the Billboard Hot 100 and their respective week at #1 in time?

Lunch vs Dinner Sales at Domino's

Math Lab demand data vs Section Enrollment by Hour

Modeling School of Choice Data in Lenawee County

Pharmacy prescription pick-up times

Piecewise-Linear Regression on Concentration / Conductivity Data

Proportion & Probability of 2-Neighborly Polytopes with m-Vertices in d-Dimensions

Ranking Types of Math Questions (Algebra-based)

Scoring Trends and Home Court Advantage in Men’s College Basketball

Skip Zone on the Sidewalk

Spaghetti Bridge and Pennies

What affects a pendulum’s behavior

Project Ideas

While these are shown in various categories, each project idea is open to anyone in any major.

Miscellaneous

Are stock prices (or percent returns) normally distributed? See http://bestcase.wordpress.com/2010/08/01/outliers-in-the-nyt-reflections-on-normality/

Various questions on where the Daily Double in Jeopardy is located (ask me for more thoughts)

Song database: http://musicbrainz.org/doc/MusicBrainz_Database

correlation between LSAT, GPA, admission, and salary; ask me to dig the data out of my email if needed

Fermat's last theorem histogram: how close can the equation come to being true?

* Mega M&Ms claim they have

"3x the chocolate per piece"

* What is the speed of the wave as dominoes

tumble in a row, as a function of the spacing between them? Is it a linear

function?

* If people close their eyes and balance on one

foot, how long can they stay up? Does it depend on which foot they stand on,

vs. their handedness?

* If you do some moderate exercise then track

your pulse after you stop, does it go back to your resting pulse in a linear

fashion? exponential? power?

* psychology/occupational therapy: learning

curves,

http://web5.uottawa.ca/www5/dcousineau/home/Research/Talks/2001-06_BBCS/2001-06_BBCS-learning.pdf

* Does the "close door" button on elevators

actually do anything?

* which packs more efficiently, plain M&Ms

or spheres?

* burning birthday candles,

http://www.algebralab.org/activities/activity.aspx?file=Science_BurningCandles.xml

http://www2.drury.edu/fred/activities/candles/candle.html

* can you do anything with the loud hand driers

in the Pray-Harrold bathrooms?

* as you add layers of tape (or post-it notes?) over

the camera of an iPhone/iPad/Android, how does the total light transmitted to

the camera vary?

* How long a toaster takes vs. the control

setting (or, how dark the toast gets?)

* how fast does water flow out of

an I.V. (intravenous fluid) bag, based on how high the bag is to where the

outflow is?

Economics

https://en.wikipedia.org/wiki/Cliometrics

Cliometrics is the study of history and economics using mathematical methods.

* business cycle durations and/or models; see http://onlinelibrary.wiley.com/doi/10.3982/ECTA8050/abstract?elq_mid=4373&elq_cid=1272383

* How much seasonal effect is there in things like inflation,

stock prices, stock indices, etc.?

- try this: separate data into months, do

ANOVA to see if there's a difference between months (or use quarters)

- should you operate on the actual value,

or the % change, or Ln( new/old ) ?

- or try triple-exponential smoothing

(also called Holt-Winters) and see what seasonal effects it finds

- also use artificial data that has no

seasonality, and artificial data that definitely does have seasonality

* How much correlation is there from day to day

(or week to week, or month to month, or year to year, or hour to hour, or

minute to minute, etc.) in inflation, stock prices, stock indices, etc?

- should you operate on the actual value,

or the % change, or Ln( new/old ) ?

* Do stock prices, etc. have a Normal distribution?

- should you operate on the actual value,

or the % change, or Ln( new/old ) ?

- if the data is correlated from day to

day, consider taking data from separate days rather than adjacent days.

- use the correlation coefficient on a Q-Q

normal probability plot as a way to do a hypothesis test (see that part of the

textbook for details--chapter 7)

* explore Hyperbolic Discounting: the way actual

people might (or might not) treat the value of money in future time periods. Do

a survey and ask how much money people would trade now for a specified payoff

in the future, then fit a function to it. Also, there is a hypothesis that

one's native language can have an effect (or is at least correlated with) on

how one views the value of future money, so consider separating results by the

person's native language.

Various data is at

http://www.econlib.org/library/sourcesUS.html

Physics

toy helicopter lift force vs rotation speed http://sphsdevilphysics.weebly.com/uploads/5/0/7/1/5071691/example02_en.pdf

GPS accuracy:

- by time of day

- by weather/day-to-day

- within span of a few seconds or minutes

- from device to device

Tablet/Smartphone Accelerometer Data:

- accuracy at 500 Hz vs 50 Hz vs 5 Hz

- correlation between devices

Mars Craters data set,

craters.sjrdesign.net

Make your own crater data set

with a bucket of sand and a heavy marble?

cepheid variable stars; ask

me to dig data out of my email box

Asteroid size distribution: can get data from http://www.asterank.com http://www.minorplanetcenter.net/iau/lists/Dangerous.html

http://earn.dlr.de/nea/table1_new.html

http://earn.dlr.de/nea/

http://www.space.com/30197-saturn-rings-math-rule.html?cmpid=514630_20150815_50643176&adbid=10153004255456466&adbpl=fb&adbpr=17610706465 and http://www.pnas.org/content/112/31/9536

Size distribution of particles in Saturn’s rings

from aggregation and fragmentation

Nikolai Brilliantov , P. L. Krapivsky , Anna

Bodrova, Frank Spahn, Hisao Hayakawa, Vladimir Stadnichuk, and Jürgen Schmidt

space weather, Coronal Mass

Ejections CME (ask me to dig up some data on this out of my emails)

There’s a new ASA section on

astrostatistics, described in AMstat News—see what they do?

http://en.wikipedia.org/wiki/Proton_decay In an experiment involving a series of particle collisions,

the amount of generated matter was approximately 1% larger than the amount of

generated antimatter. The reason for this discrepancy is yet unknown.[2]

V.M. Abazov et al. (2010). "Evidence

for an anomalous like-sign dimuon charge asymmetry". arXiv:1005.2757. http://arxiv.org/abs/1005.2757

Future Teachers

Tennessee STAR study on small

class sizes

problems with estimating from pie charts

parents probability of pulling kids from public

schools (survey)

Regression through the origin: when?

Instead of a regular project, work on getting

the Data Analysis electronic badge?

anchoring effect

Barbie Bungee: make a bungee-cord out of rubber bands, and

send a Barbie (or similar toy) plunging toward the floor. Try it with a few

different lengths of cord, record how far she plunges, then forecast how many

rubber bands would be needed for a 12-foot drop. You can find more info online,

of course.

Spaghetti Bridges: make a simple bridge of straight spaghetti (not glued into a

truss), see how much weight it can hold. Repeat with wider spans and/or more

strands per bridge. You can find more info online. One reference is

“Slope-Intercept Form—Beam Strength” from Exploring Algebra 1 with TI-Nspire,

2009, Key Curriculum Press.

Pullback Cars

* How far does a supersoaker shoot, based on how many pumps

you give it?

* How far does a supersoaker shoot, as a function of time as you hold down the

trigger?

Statistics Majors

Here are some ideas about the

mechanics of statistics:

LiveRegression formulas

Confidence interval on s_e for linear regression

Partial Correlation in multivariate analysis

Simulate a thought experiment on publication bias

advanced work on causality: http://magazine.amstat.org/blog/2013/08/01/causality-in-stat-edu/

Judea Pearl work on causality

Bayesian networks

Granger causality

Computer Science

Consider reading “Statistical Modeling: The Two

Cultures”, which talks about the divide between traditional statistical methods

and “machine learning” methods:

https://projecteuclid.org/download/pdf_1/euclid.ss/1009213726

Machine Learning problems:

logistic regression, SVM, etc.

Cross-Validation: training

and test data sets

Queueing simulation in Python

(using existing code from Prof. Ross)chi

distribution of file sizes

- on a hard drive (correlated to time of

creation, modification, or access?)

- on a web server

- as requested from a web server

distribution of packet sizes, and correlation

from one to the next?

distribution of time gap between packets, and correlation from one to the next?

spam filtering; try the Enron email database at http://www.cs.cmu.ed/~enron

durations of jobs on the CPU

memory sizes of jobs

paging policies

Network round-trip times for

pings

Sleep vs Cron repetitive

wakings

look into what gets presented at ACM SIGMETRICS

http://sciencehackday.pbworks.com/w/page/24500475/Datasets

http://aws.amazon.com/datasets?_encoding=UTF8&jiveRedirect=1

http://www.cs.cmu.edu/~awm/15781/project/data.html

Health care

Medicare Home Health Compare

Gardasil data set:

http://www.amstat.org/publications/jse/v19n1/gardasil.txt

SEER cancer data set,

http://seer.cancer.gov/

(need to submit application to use it)

National Longitudinal Study

of Adolescent Health, via IPCSR/umich (easiest to use wave 1)

Health Evaluation and Linkage

to Primary Care (HELP), data set HELPrct from Project Mosaic

painkiller prescription and overdose rates by state; I have some of the data saved in an email

Sports

Pick your favorite sport and

ask a statistical question about it. Some examples:

* predicting player performance from

previous years (helpful first step to choosing a fantasy team)

* quantifying home-field advantage

* (harder) quantifying time-zone advantage

* bracketology

How consistent is a participant's

performance (#fish? weight? rank? z-score?) from one event in the tour to the

next? Compare to other individual-performance sports? Here are links for 3

tournaments in 2014:

http://www.flwoutdoors.com/bassfishing/flw/tournament/2014/7128/kentucky-lake-paris-landing-professional-results/?dpl=1&all=1&sr=1&rpp=50&&sort=pl

http://www.flwoutdoors.com/bassfishing/afs/tournament/2014/7134/potomac-river-professional-results/?dpl=1&all=1&sr=1&rpp=50&&sort=pl

http://www.flwoutdoors.com/bassfishing/afs/tournament/2014/7135/lake-champlain-professional-results/?dpl=1&all=1&sr=1&rpp=50&&sort=pl

How about the Hot Hand?

http://regressing.deadspin.com/the-myth-of-the-myth-of-the-hot-hand-1588112937

http://www.nytimes.com/2014/06/26/science/the-science-of-hot-hand.html?referrer=&_r=2

http://andrewgelman.com/2014/03/11/myth-myth-myth-hot-hand/

Here is a stats textbook that has a sports focus, rather

than just doing sports-statistics, but it might still be interesting: http://www.sportsci.org/resource/stats/index.html

Related books and

Websites

Statistical

Applets:http://sapphire.indstate.edu/~stat-attic/index.php?topic_id=HT

GeoGebra can do some statistics: http://web.geogebra.org/beta/

use

the three-bar button in the upper right

choose Perspectives

choose Spreadsheet&Graphics

click on the normal curve with an area under it

Play with either the Distribution or the

Statistics tab

Statistics can do Z Test of a Mean, T Test

difference of means, etc.

Excel 2010 for educational

and psychological statistics : a guide to solving practical problems / Thomas

Quirk.

Excel 2010 for biological and life sciences

statistics : a guide to solving practical problems / by Thomas J. Quirk, Meghan

Quirk, Howard Horton.

Converting Data into Evidence

A Statistics Primer for the Medical Practitioner

DeMaris, Alfred, Selman, Steven H.

Statistics with Excel website:

http://www.real-statistics.com/

Little Handbook of Statistical Practice

http://www.jerrydallal.com/LHSP/LHSP.HTM

http://www.stat.berkeley.edu/~aldous/Real-World/draft_book.pdf

On Chance and Unpredictability: 13/20 lectures

on the links between mathematical probability and the real world. David Aldous,

January 2012

Statistical

Reasoning in Sports, by Tabor and Franklin http://bcs.whfreeman.com/sris/

http://content.bfwpub.com/webroot_pubcontent/Content/BCS_5/SRIS/Student/Appendices/AppB_Using_Excel.pdf

Statistics: A Guide to the

Unknown

Forty Studies that Changed Psychology:

Exploration into the History of Psychological Research

"Making Sense of

Data" volumes 1,2,3, by Glenn J. Myatt; EMU library has an electronic

subscription

Doing Data Science: Straight

Talk from the Frontline, By Cathy O'Neil, Rachel Schutt; Publisher: O'Reilly

Media

Chapter 1

We will use this

link for the Car Insurance activity:

https://docs.gooREMOVETHISgle.com/spreadsheet/embeddedform?formkey=dEV4M2hZanFqRlByRVRadWJiZTFQd3c6MQ

and then later we will use this link for the

Data Types activity:

https://docs.gooREMOVETHISgle.com/spreadsheet/embeddedform?formkey=dERFS3VncWZHbUZMNnVVcnJwcXltYlE6MA

Some additional reading is included below.

To prepare for our next class, we will use the following PDF file

on Random Rectangles:

http://www.rtmsd.org/cms/lib/PA01000204/Centricity/Domain/197/RandomRectangles.PDF

and you should enter your answers here before

class starts:

https://docs.gooREMOVETHISgle.com/spreadsheet/embeddedform?formkey=dHN0SlctaDFNaTY2QWctS3RraHJZbWc6MQ

Data

Types

Sometimes we code binary categorical variables (like gender) as 0 or 1;

that’s called Dummy coding. We can also code them as -1 vs +1; that’s called Effect

coding: http://methodology.psu.edu/node/266

Here is some reading on the standard

classifications for Data Types (nominal, ordinal, interval, ratio):

http://www.upa.pdx.edu/IOA/newsom/pa551/lecture1.htm

http://en.wikipedia.org/wiki/Level_of_measurement

and an opposing viewpoint:

www.cs.uic.edu/~wilkinson/Publications/stevens.pdf

which cites, among other possible systems,

Mosteller and Tukey (1977 Chapter 5):

* Names

* Grades (ordered labels such as Freshman,

Sophomore, Junior, Senior)

* Ranks (starting from 1, which may represent

either the largest or smallest)

* Counted fractions (bounded by zero and one.

These include percentages, for example.)

* Counts (non-negative integers)

* Amounts (non-negative real numbers)

* Balances (unbounded, positive or negative

values).

Doing Data

Science, page 23, suggests:

• Traditional: numerical, categorical, or binary

• Text: emails, tweets, New York Times articles

• Records: user-level data, timestamped event data, json-formatted log

files

• Geo-based location data

• Network

• Sensor data

• Images

Also see http://stats.stackexchange.com/questions/539/does-it-ever-make-sense-to-treat-categorical-data-as-continuous

For future teachers: I

was amazed to see in my daughter's 3rd grade homework a link with our

Categorical/Quantitative, Discrete/Continuous discussion:

This homework sheet talks about Count, Measure, Position, and Label:

http://www.eduplace.com/math/mw/practice/3/homework/1_1.pdf

This one is amazingly similar to our activity where we talked about

Nominal, Ordinal, Interval, Ratio for our start-of-semester-survey:

http://www.eduplace.com/math/mw/practice/3/enrichment/1_1.pdf

I'm not sure if it's in all such curricula--the book they're using is by

Houghton Mifflin.

Dotplots

Remember that dotplots can

tell us:

* S: the Shape of the

distribution: (concentrated at an endpoint? Or in the middle?

* O: any Outliers or other unusual features like

gaps

* C: where the data is Centered

* S: how Spread the data is (or as some call it,

Variability)

so if you're writing sentences describing a

dotplot, you should write at least one sentence for each of those bullet

points. Remember the acronym SOCS. It’s important to do them in that order,

too, because shape and outliers often influence our choice of how to measure

center and spread.

For example, for a sibling-count dotplot we did

one year in class:

The data is concentrated near

the low end.

There are no unexpected gaps or outliers.

The center of the data is around 3.

The data is spread from 1 to 9.

A more subtle concept is

“Inlier”, like an outlier in the sense that it might be a false reading, but it

doesn’t fall outside the main data range. A common example is for missing data

to accidentally be represented by a zero, when small numbers like zero or 1 or

2 are in fact perfectly plausible real data values.

Lexical ambiguity: making a case

against spread

Jennifer J. Kaplan1, Neal T. Rogness2 and Diane

G. Fisher3

Article first published online: 28

APR 2011

DOI: 10.1111/j.1467-9639.2011.00477.x

http://onlinelibrary.wiley.com/doi/10.1111/j.1467-9639.2011.00477.x/full

That article says that “variability” is a better word.

Population and

Sample

First, let’s note that in

statistics, a Sample almost always means more than one data value. If you poll

25 people for a project, that is a single Sample, not 25 samples. This is in contrast

to how scientists often think of samples: a blood sample, or a sample from a

lake or river, often makes us think of just one container of blood or water.

Doing Data Science, page 21,

asks:

But,

wait! In the age of Big Data, where

we can record all users’ actions all the time, don’t we observe everything? Is

there really still this notion of population and sample? If we had all the

email in the first place, why would we need to take a sample?

And on page 25:

The

way the article frames this is by claiming that the new approach of Big Data is

letting “N=ALL.”

Can N=ALL?

Here’s the thing: it’s pretty much never all. And we are very often missing the

very things we should care about most.

Chapter 2

Example of Sampling Frames: 3 different lists of all doctors in a country

optimal

assignment instead of random assignment:

http://pubsonline.informs.org/doi/pdf/10.1287/opre.2015.1361

Dimitris Bertsimas, Mac Johnson, Nathan Kallus

(2015) The Power of Optimization Over Randomization in Designing

Experiments Involving Small Samples. Operations

Research 63(4):868-876. http://dx.doi.org/10.1287/opre.2015.1361

Confounding

Confounding vs Lurking:http://www.virmanimath.com/start-page-2012-2013/ap-stats-2012-2013/chapter-2/apstatonlineclass/confounding-and-lurking-variables

Example of confounding: Stereotypically, old people are thought of as not very good with new technology. Is that because they have lived a large number of years, or because they were born during a particular decade or two? There’s no way to disentangle those two things.

Another example: An

exhibit at the Wagner farm in Glenview, IL has 3 rope/pulley systems, each

trying to lift an equal weight. One is a simple pulley; the next is compound

(down and up), the 3rd is even more compound (down/up/down). The ropes used are

also slightly different: the most compound one uses a thinner rope. The most

compound one should be the easiest to pull. [it's not, due to a lack of

lubrication and some bent axles on the pulleys).

My daughter tries all 3 and decided that the diameter of the rope is what makes

things easier or harder to lift.

Another example: Examining

Variation in Recombination Levels in the Human Female: A Test of the

Production-Line Hypothesis; Ross Rowsey, Jennifer Gruhn, Karl W. Broman,

Patricia A. Hunt, Terry Hassoldemail, http://dx.doi.org/10.1016/j.ajhg.2014.06.008

and

With

Gene Disorders, The Mother's Age Matters, Not The Egg's

Also, I heard this somewhere: Women who have more kids tend to have started at an earlier age than women that have fewer kids. So if you're looking for the relationship between that and breast cancer, which one is the main effect?

Imagine dropping a marble into a

bucket of sand and measuring the diameter of the crater.

If you change the diameter of the impactor,

you're also changing the weight (or vice versa), unless you take very great

care to find marbles/balls that change density or become hollow in just the

right way.

The table would look like this:

Diameter Weight DropHeight

CraterSize

0.5cm 2grams

25cm 5cm

0.5cm 2grams

25cm 6cm

0.7cm 3grams

25cm 7.1cm

0.7cm 3grams

25cm 7.3cm

You could control weight separately from

impactor diameter by using a non-sphere impactor, like a stack of pennies or

nickels, or AA batteries. But then you'd have to be careful to control its

orientation at impact--maybe have it slide down a V-shaped near-vertical

channel, or suspended from a string (balanced perfectly vertically) and very

still, then cut the string.

Activity

Quick activity: name that

sampling method

a. Roll a die to pick a row in class, then ask

each student in that row; do it twice

b. Pick a student and then every 5th student

after that

c. Ask one student from each row

d. pick some students, say "you guys look

like typical students"

e. throw an object, see who it hits

f. Number the students, etc.

In _______ sampling, ALL groups (strata?

clusters?) are used, and SOME individuals in each are sampled.

In _______ sampling, SOME groups (strata?

clusters?) are used, and ALL individuals in each are sampled.

If you’ve done Stratified sampling, how do you combine your strata results into whole-sample results? Ask me for a photocopy from Applied Statistics for Engineers and Scientists, 2nd Edition, by Devore and Farnum.

Kate Crawford’s talk, Algorithmic Illusions: Hidden Biases of Big Data

Dotplots of Random Rectangles results, look for

bias

Bias in cancer screening: Crunching Numbers: What Cancer Screening Statistics Really Tell Us, by Sharon Reynolds, http://www.cancer.gov/ncicancerbulletin/112712/page4

Bias due to question ordering: http://textbookequity.org/oct/Textbooks/Lippman_mathinsociety.pdf

page 137: A psychology researcher provides an example:

“My favorite finding is this: we did a study where we asked students, 'How

satisfied are you with your life? How often do you have a date?' The two

answers were not statistically related - you would conclude that there is no

relationship between dating frequency and life satisfaction. But when we

reversed the order and asked, 'How often do you have a date? How satisfied

are you with your life?' the statistical relationship was a strong one.

You would now conclude that there is nothing as important in a student's

life as dating frequency.”

Swartz,Norbert.

http://www.umich.edu/~newsinfo/MT/01/Fal01/mt6f01.html. Retrieved 3/31/2009

Bias in psychology studies: most undergrad students who

volunteer as subjects are WEIRD (or WIRED): Western,

Educated, Industrialized, Rich, and Democratic

http://journals.cambridge.org/action/displayAbstract?fromPage=online&aid=7825833

http://www.slate.com/articles/health_and_science/science/2013/05/weird_psychology_social_science_researchers_rely_too_much_on_western_college.html

Study Design

Overly Honest Methods

Does the needed sample size

grow as the population grows?

Page 43 says no!!!!!!!

Here is a blank copy of Table

2.1 ; try to fill it out with “yes” and “no” entries by reasoning about each

situation.

|

Study Description |

Reasonable to

generalize conclusions about group to population? |

Reasonable to draw

cause-and-effect conclusion? |

|

Observational study

with sample selected at random from population of interest |

||

|

Observational study

based on convenience or voluntary response sample |

||

|

Experiment with groups

formed by random assignment of individuals or objects to experimental

conditions |

(no entry; this row is

just a header for the next 2 rows) |

(no entry) |

|

* Individuals or

objects used in study are volunteers or not randomly selected |

||

|

* individuals or

objects are randomly selected |

||

|

Experiment with groups

not formed by random assignment to experimental conditions |

Rating

System for the Hierarchy of Evidence: Quantitative Questions

Level I: Evidence from a systematic review of all relevant randomized

controlled trials (RCT's), or evidence-based clinical practice guidelines based

on systematic reviews of RCT's

Level II: Evidence obtained from at least one well-designed Randomized

Controlled Trial (RCT)

Level III: Evidence obtained from well-designed controlled trials without

randomization, quasi-experimental

Level IV: Evidence from well-designed case-control and cohort studies

Level V: Evidence from systematic reviews of descriptive and qualitative

studies

Level VI: Evidence from a single descriptive or qualititative study

Level VII: Evidence from the opinion of authorities and/or reports of expert

committees

Above information from "Evidence-based practice in nursing &

healthcare: a guide to best practice" by Bernadette M. Melnyk and Ellen

Fineout-Overholt. 2005, page 10.

Additional information can be found at: www.tnaonline.org/Media/pdf/present/conv-10-l-thompson.pdf

Chapter 2.3: Comparative Experiments

"explanatory" variables are sometimes called

"independent", and

"response"

variables are often called "dependent",

but in later chapters we will learn this can cause confusion.

Blocking: means "putting into groups or blocks", rather than "obstructing".

Blocking activity: email from a friend in the Health school here at EMU:

> Dr. Ross,

> I hope your holiday went well. I have recently completed a project

> identifying some basic variables to be used in preliminary

> evaluation of gait interventions to determine whether the new

> intervention would be worth conducting an in-depth study about. The

> variables include stride length, step width, stride variability, and

> lateral displacement of the total body center of mass. As you can

> see, these variables represent some of the most basic aspects of

> stability, which is what we are always trying to improve or

> maintain, and efficiency.

>

> My question to you is: what sample size e.g. 10 trials, 20 trials, 50

> trials, would we need to take from both a control group (barefoot or

> with regular shoes) and the experimental group (the intervention) in

> order to obtain a confidence level to say that very small changes

> between the two groups is statistically significant? An example

> would be that the average lateral sway during gait was 5 mm less in

> the experimental group form the control group, is that significant

> or not?

Consider the study design here: Evidence Of Racial, Gender Biases Found In

Faculty Mentoring

Or watch

this video and consider how to design a study related to it:

Watch this video, called "Dove: Patches"

* If you were to design a study around this concept, what would your

research question be?

* How would you design the study to answer that question?

Replication

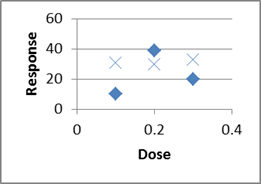

Why do we try to do more than one trial at each level of the explanatory

variable?

Imagine this data set:

What if we had only done one trial at each dose?

Might see just the diamonds, or just the Xs, leading to two completely different ideas of the trend!

And that's just by doing two rather than one at each level!

Replication allows us to quantify the variability/uncertainty at each level.

Also, when designing, choose 3 or more X values, so we can detect nonlinearity.

Controls: Positive and Negative

Bio/Chem: when trying to detect a chemical in a sample (pollution in a lake?),

run your procedures on some known pure water (Negative Control),

and on some water with a known amount of pollutant deliberately added to it

(Positive Control).

Computer Science: when testing spam-filtering software,

run it on some known non-spam ("ham")--negative control,

and on some known spam -- positive control.

What is the difference between placebo and control?

Placebos are meant to fool _people_--usually unnecessary on non-people.

While control experiments apply to people and non-people alike.

But you should still handle animals in the control group the same way (incl.

surgery?)

This article is a humorous take on experimental vs

observational, etc: How To Argue With Research You Don't Like,

http://www.washingtonpost.com/blogs/wonkblog/wp/2013/09/12/how-to-argue-with-research-you-dont-like/

Chapter 3

Guidelines and debate about information visualization: http://eagereyes.org/blog/2012/responses-gelman-unwin-convenient-posting and http://robertgrantstats.wordpress.com/2014/05/16/afterthoughts-on-extreme-scales/

A “Segmented Bar Chart” in

our textbook is the same as a “100% Stacked” chart in Excel. If you change the

widths of the bars to reflect the counts of each bar, that is called a “Mosaic”

chart by most statisticians, or Fathom calls it a “Ribbon” chart. Here are some

thoughts on how to make them in Excel: here and here

http://asq.org/learn-about-quality/data-collection-analysis-tools/overview/histogram2.html

includes some I haven't seen before expressed in

this way:

* Edge peak. The edge peak distribution looks

like the normal distribution except that it has a large peak at one tail.

Usually this is caused by faulty construction of the histogram, with data

lumped together into a group labeled “greater than…”

* comb distribution: In a comb distribution, the

bars are alternately tall and short. This distribution often results from

rounded-off data and/or an incorrectly constructed histogram. For example,

temperature data rounded off to the nearest 0.2 degree would show a comb shape

if the bar width for the histogram were 0.1 degree.

*heart cut distribution: like Normal but with

upper and lower bounds (only selling products that fall in an acceptable range)

*dog food distribution: what's left after a

heart-cut distribution (only selling products on secondary market that fall

outside acceptable range)

Indian

Standardized Test Histogram

A very interesting data

set/set of histograms, which we would expect to have a Normal distribution like

the SAT or ACT, but it's definitely non-normal in very interesting ways:

(you'll need to scroll down past the description of how he collected the data

in a sneaky way):

http://deedy.quora.com/Hacking-into-the-Indian-Education-System

whereas SAT data can be found at

http://research.collegeboard.org/programs/sat/data

http://research.collegeboard.org/content/sat-data-tables

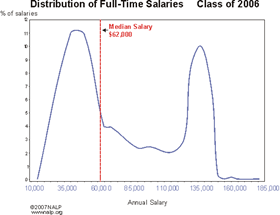

Lawyer starting salaries: (and then 2007 is even worse)

Are adult heights distributed bimodally due to male/female differences?

http://faculty.washington.edu/tamre/IsHumanHeightBimodal.pdf

http://commoncoretools.files.wordpress.com/2012/04/ccss_progression_sp_hs_2012_04_21.pdf

... two ways of comparing height data for males

and females in the 20-29 age group. Both involve plotting the data or data

summaries (box plots or histograms) on the same sale, resulting in what are

called parallel (or side-by-side) box plots and parallel histograms. The

parallel box plots show an obvious difference in the medians and the IQRs for

the two groups; the medians for males and females are, respectively, 71 inches

and 65 inches, while the IQRs are 4 inches and 5 inches. Thus, male heights

center at a higher value but are slightly more variable.

... Heights for males and females have means of

70.4 and 64.7 inches, respectively, and standard deviations of 3.0 inches and 2.6

inches.

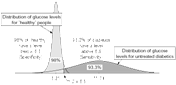

Blood

Sugar Levels [note that the height of the Diabetic peak should be much

smaller in the whole population, like 2% to 5%; this graph is showing two conditional distributions]

Duration of pregnancy has a left-skewed histogram; doi:10.1093/humrep/det297

Birth weight and birth length probably are also left-skewed?

Where the bins start can affect the apparent shape of the

histogram:http://zoonek2.free.fr/UNIX/48_R/03.html

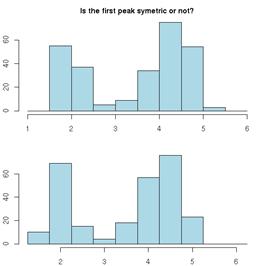

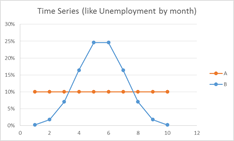

Which histogram below shows more variability, A or B? (adapted from a SCHEMATYC document)

Which time series shows more variability, A or B?

How can we address the mismatch? Focus on understanding and labeling the AXES! (I deliberately didn’t label the axes, above)

Histogram:

x = what values?

y = how many?

Time plot:

x = when?

y = what value?

data sets of quiz scores: which is more variable/harder to

predict?

data set 1: 1 2 3 4 5 6 7 8 9 10; histogram looks flat

data set 2: 8 8 8 8 8 8 8 8 8 8 ; histogram has a spike

Other sample histograms from something I created for my Math

110 class. Here's the link:

http://people.emich.edu/aross15/math110/m110-coursepack-supplement-v6.docx

and just search inside the file for Histogram.

An alternative to a histogram is a Frequency Polygon; these tend to be better when showing two or more histograms on the same graph

Heart Rate Survey Data with Relative Ranks:

https://tuvalabs.com/mydatasets/d24bae4915ee4ad99a99b263f7a7143d/

Classification of Chart Types

http://www.excelcharts.com/blog/classification-chart-types/

-----------------------------------

Melanie Tory and Torsten Moller’s InfoVis 2004

paper “Rethinking Visualization: A High-Level Taxonomy” (search for it at

scholar.google)

http://webhome.cs.uvic.ca/~mtory/publications/infovis04.pdf

------------------------------------------------

Choosing a Good Chart

http://extremepresentation.typepad.com/blog/2006/09/choosing_a_good.html

------------------------------------------------

a task by data type taxonomy for information

visualization.

----------------

don't use rainbow colors for sequential data

values:

http://www.wired.com/2014/10/cindy-brewer-map-design/

http://datascience.ucdavis.edu/NSFWorkshops/Visualization/GraphicsPartI.pdf

http://www.poynter.org/uncategorized/224413/why-rainbow-colors-arent-always-the-best-options-for-data-visualizations/

-----------------------------------------------------

a really good overview:

http://datascience.ucdavis.edu/NSFWorkshops/Visualization/

-----------------------------------------------------

Statistical Graphics for Univariate and

Bivariate Data

William G. Jacoby

Quantitative Applications in the Social

Sciences, #117

A Sage University Paper

page 11

from most accurate to least accurate:

A. Position along a common scale

B. Position along common, nonaligned scales

C. Length

D. Angle* [perceptual judgments abou tangles and

slopes/directions are carried out with equal accuracy, so their relative

ordering in this figure is arbitrary]

E. Slope, Direction* [see above]

F. Area

G. Volume

H. Fill density, Color Saturation

page 23, regarding kernel density estimates:

"In statistical terminology, narrow

bandwidths produce low-bias, high-variance histograms; the density trace

follows the data very closely (low bias), but the smooth curve jumps around

quite a bit (high variance). Larger bandwidths are high bias and low variance

because they produce a smooth density trace (low variance in the plotted

values) but depart from the actual data to a more substantial degree (high bias

in the graphical representation)."

[they must mean variance in the vertical height]

page 52, Construction Guidelines for Bivariate

Scatterplots

mostly from Cleveland 1994

"/Make sure that the plotting symbols are

visually prominent and relatively resistant to overplotting effects/. ... For

example, small plotting symbols are easily overlooked. At the same time, larger

filled symbols make it quite difficult to distinguish overlapping data points.

For these reasons, open circles make good general-purpose plotting symbols for

bivariate scatterplots.

"/Rectangular grid lines usually are

unnecessary within the scale rectangle of a scatterplot/

"/The data rectangle should be slightly

smaller than the scale rectangle of the scatterplot/. Otherwise, it is likely

that some data will be hidden as a result of intersections between points and

the scale lines....

"/Tick marks should point outward, rather

than inward, from the scale lines/. ... further reduces the possibility of

collisions between data points and other elements of the scatter diagram...

and then a few other, less important.

page 85, Aspect ratio and Banking to 45 degrees

https://eagereyes.org/basics/banking-45-degrees

actually argues that the original study didn't

test other possibilities:

"While Cleveland et al. assumed the

precision of value judgments would again decrease when going below 45º, they

did not present actual data to show that.

When testing lower average slopes, Talbot et al.

found that people actually got better with shallower slopes. The 45º were an

artifact of the study design."

Also see:

https://eagereyes.org/section/seminal-papers

-----------------------------------------------------

Chapter 4

A puzzle to start class:

1) What value of x minimizes (x-1)^2 + (x-3)^2 +

(x-8)^2 ?

2) What value of x minimizes abs(x-1) + abs(x-3)

+ abs(x-8) ?

(while you could use calculus on #1, you can't

on #2, so I suggest just graphing both of them)

Doing Data Science, page 270: “the average person on Twitter is a woman with 250 followers, but the median person has 0 followers”

Mythbusters on Standard Deviation: testing different soccer-ball launchers, looking for consistency (dig the spreadsheet out of my email?)

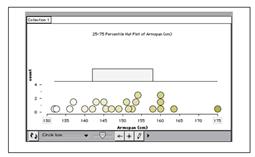

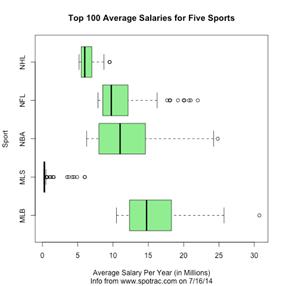

Boxplots: An interesting comparative boxplot on what

different hospitals charge for different blood tests: Variation

in charges for 10 common blood tests in California hospitals: a cross-sectional

analysis by Renee Y Hsia1, Yaa Akosa

Antwi2, Julia P Nath

Figure 1: Variation in charges for 10 common

blood tests in California (CBC, complete blood cell count; ck, creatine kinase;

WCC, white cell count). Central lines represent median charges, boxes represent

the IQR of charges, and whiskers show the 5th and 95th centile of charges for

each of the 10 common blood tests.

Boxplots: variability in salaries for top 100 athletes in various US sports, from Jeff Eicher via the AP-Stats community:

Transitioning from Dotplots to Boxplots: Hat Plots, a math-education-specific way to make the transition; shown in The Role of Writing Prompts in a Statistical Knowledge for Teaching Course by R.E. Groth (draft saved in email); Tinkerplots can do them.

Old Class Data

Here is the data that you entered this semester on your own

height, in inches above 5-foot-0-inches.

We will use this in class.

Not to spoil the fun, but we will:

* make a histogram to see its general shape, in bins of width 2 inches

(use the histogram template file we've been using)

* compute the mean & SD

* compute another histogram with bin widths of 1 SD, centered on the mean

* look at the % of data points within +/- 1 SD of the mean, and then +/- 2 SD

of the mean, and +/- 3 SD.

6

10.5

9

7

10

8

12

0

8

10

5

2

6

10

9.5

4 in

16.5

17

6

9

13

6

11

9

7.75

6

15

2

0.4

4 inches

6

5

14.5

12

3

5-foot-6-inches

4

11

10

11

4.75

6

12

7

8

9

12.5

8

And here's another data set, on how long it took students in one of my

Math 110 classes to walk from Green Lot to Pray-Harrold, in decimal minutes:

9.4166666667

8.35

9.6

10.4666666667

10.5333333333

12.15

7.7666666667

8.1666666667

6.3666666667

6.3666666667

0.1334166667

8.2166666667

7.25

7.3333333333

22.7833333333

7.55

6.5833333333

4.45

7.1

3.7166666667

5.7166666667

11.9833333333

6.2666666667

8.6333333333

5.7

7.25

7.9666666667

7.8833333333

6.766666666

Algorithms for Computing the Mean and the Variance

Surprisingly,

it can be hard to compute the mean, and especially the variance, if there is a

huge amount of data, or if roundoff error is an issue. Computer science people

might want to read:

http://cpsc.yale.edu/sites/default/files/files/tr222.pdf

equivalent to

Chan, Tony F.; Golub, Gene H.; LeVeque, Randall

J. (1983). Algorithms for Computing the Sample Variance: Analysis and

Recommendations. The American Statistician 37, 242-247. http://www.jstor.org/stable/2683386

Also:

http://en.wikipedia.org/wiki/Algorithms_for_calculating_variance

Chapter 5

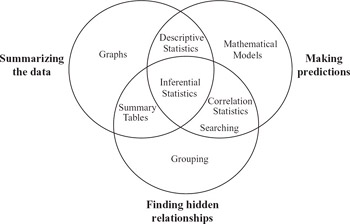

Is the main purpose of regression to make predictions? The book “Making Sense of Data, Volume I” says that statistics is for: making predictions, finding hidden relationships, and summarizing the data:

Here is another take on it: To Explain or to Predict? by Galit Shmueli

Forecasting time series data is an important statistics

topic that we don’t have time to do in detail. Here is a freely available

textbook chapter about it: “Chapter 16: Time Series and Forecasting”

http://highered.mcgraw-hill.com/sites/dl/free/0070951640/354829/lind51640_ch16.pdf

There are more types of regression than what we’ll learn

about. See 10 types of regressions.

Which one to use?

For future teachers: Unofficial TI-84

regression manual, or another site. It

mentions that you need to turn on the Calculator Diagnostics to get the r and

r^2 values. Do that by doing [2nd] [Catalog] [D] [Diagnostic On]

[Enter]; you should only have to do that once in the lifetime of the calculator

(unless you do a full-reset?)

And, it’s important to be able to compute and plot residuals.

Here are

instructions for doing it on a TI-84.

also should include:

If you accidentally delete List1, then do this:

Stat -> Stat Editor -> (enter) ->

(enter)

Here's an animation of what it means to have a bell-curve

distribution of the residuals:

http://screencast.com/t/w7YOge93Nj

This could help in understanding the first few

sheets on the pre-work for classtime

Example Plots

Here is an example

heteroscedastic scatter plot: x=income, y=Expenditure on food, both

in multiples of their respective mean; this is UK data on individuals, from

1968-1983

![\includegraphics[scale=0.7]{ANRfoodscat.ps}](m360-supplement-2015-fall_files/image023.jpg)

Here is some data on

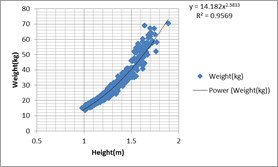

school-age children in the US, height and weight, that also shows heterskedasticity:

data from http://www.nal.usda.gov/fnic/DRI/DRI_Energy/energy_full_report.pdf

There are various tests for heteroscedasticty:http://en.wikipedia.org/wiki/Breusch%E2%80%93Pagan_test

An example Matrix of Scatterplots, from Statistical Methods in Psychology Journals:

It’s data from a national survey of 3000 counseling clients (Chartrand 1997); on the diagonal are dotplots of the individual variables, and off the diagonal there are scatterplots of pairs of variables. “Together” is how many years they’ve been together in their current relationship. What do you see in these plots?

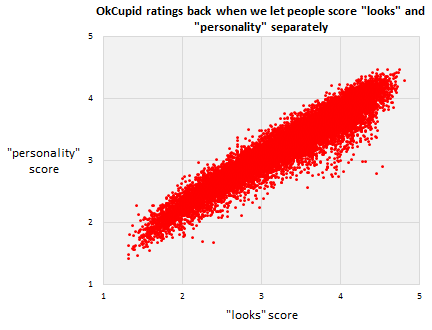

Here’s a fun/depressing scatterplot, from OK Cupid: http://blog.okcupid.com/index.php/we-experiment-on-human-beings/

And then [in the following paragraph, what does the “less than 10%” mean, in terms of statistical things like slope, intercept, correlation coefficient, R^2, etc?]

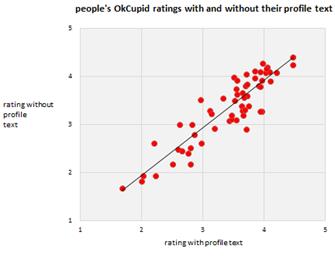

After we got rid of the two

scales, and replaced it with just one, we ran a direct experiment

to confirm our hunch—that people just look at the picture. We took a small

sample of users and half the time we showed them, we hid their profile text.

That generated two independent sets of scores for each profile, one

score for “the

picture and the text together” and one for “the picture alone.” Here’s how

they compare. Again, each dot is a user. Essentially, the text is less than 10%

of what people think of you.

Correlation and Causation

Doing Data Science, page 26:

Say you decided to compare women and men with the exact same

qualifications that have been hired in the past, but then, looking into what

happened next you learn that those women have tended to leave more often, get

promoted less often, and give more negative feedback on their environments when

compared to the men. Your model might be likely to hire the man over the woman

next time

the two similar candidates showed up, rather

than looking into the possibility that the company doesn’t treat female

employees well. In other words, ignoring causation can be a flaw, rather than a

feature. Models that ignore causation can add to historical problems instead of

addressing them.... And data doesn’t speak for itself. Data is just a

quantitative, pale echo of the events of our society

Also see the fantastical claims in “The End of Theory: The Data

Deluge Makes the Scientific Method Obsolete” Chris Anderson, Wired, 2008

And, Statistical Truisms in the Age of Big Data by Kirk Borne

Can this data tell us

anything?

http://www.deathpenaltyinfo.org/murder-rates-nationally-and-state#MRalpha

Logarithms

Grab data for

infant mortality vs. gdp-per-capita from my email box?

Starbucks data: http://textbookequity.org/oct/Textbooks/Lippman_mathinsociety.pdf

Year Number of Starbucks stores

1990 84

1991 116

1992 165

1993 272

1994 425

1995 677

1996 1015

1997 1412

1998 1886

1999 2498

2000 3501

2001 4709

2002 5886

2003 7225

2004 8569

2005 10241

2006 12440

2007 15756

http://www.starbucks.com/aboutus/Company_Timeline.pdf retrieved May 2009

Like Moore’s law, but for LEDs: http://en.wikipedia.org/wiki/Haitz's_law

Range of human

hearing and range of human vision:

http://eagereyes.org/blog/2012/values-worth-chart

Double-Log

scales:

http://robertgrantstats.wordpress.com/2014/05/16/afterthoughts-on-extreme-scales/

http://en.wikipedia.org/wiki/Graphical_timeline_from_Big_Bang_to_Heat_Death

Regression to the Mean

http://en.wikipedia.org/wiki/Regression_to_the_mean

The Rhine

Paradox, about testing for ESP

http://support.sas.com/resources/papers/proceedings10/271-2010.pdf

http://onlinestatbook.com/stat_sim/reg_to_mean/

http://books.google.com/books?id=NcFOwRCwDOQC&lpg=PA252&ots=QJJ87auCoA&dq=%22regression%20toward%20the%20mean%22%20data%20set&pg=PA251#v=onepage&q=%22regression%20toward%20the%20mean%22%20data%20set&f=false

http://isites.harvard.edu/fs/docs/icb.topic469678.files/regress_to_mean1.pdf

http://codeandmath.wordpress.com/2012/11/21/regression-to-the-mean/

http://www.stat.berkeley.edu/~bradluen/stat2/lecture12.pdf

This page points out the problem of doing repeated tests as the sample

size grows--even if H0 is true, the P value will wander between 0 and 1

randomly, and if you decide to stop when it hits 0.05 you're doing something

bad: http://www.refsmmat.com/statistics/regression.html

Why best cannot last: Cultural differences in predicting regression

toward the mean

http://onlinelibrary.wiley.com/doi/10.1111/j.1467-839X.2010.01310.x/abstract

Roy R. Spina, Li-Jun Ji, Michael Ross, Ye Li, Zhiyong Zhang

Article first published online: 16 AUG 2010; DOI:

10.1111/j.1467-839X.2010.01310.x

Keywords: culture; lay theories of change; prediction; regression toward the

mean

Four studies were conducted to investigate cultural differences in predicting

and understanding regression toward the mean. We demonstrated, with tasks in

such domains as athletic competition, health and weather, that Chinese are more

likely than Canadians to make predictions that are consistent with regression

toward the mean. In addition, Chinese are more likely than Canadians to choose

a regression-consistent explanation to account for regression toward the mean.

The findings are consistent with cultural differences in lay theories about how

people, objects and events develop over time.

Home Run Derby. There

is a popular view that players who participate in the Home Run Derby somehow

"hurt their swing" and do worse in the second half of the season.

This article talks about how this phenomenon can be accounted for by regression

to the mean.

http://fivethirtyeight.com/datalab/the-home-run-derby-myth/

Ecological Fallacy

http://en.wikipedia.org/wiki/Ecological_fallacy

http://www.jerrydallal.com/LHSP/corr.htm

try google images for:

ecological fallacy

http://ehp.niehs.nih.gov/1103768/

Three Criteria for Ecological Fallacy

Alvaro J. Idrovo

mentions/has a diagram for

ecological fallacy

atomistic fallacy

sociologistic fallacy

psychologistic fallacy

Interpreting

the Intercept

Interpreting the Intercept in a

Regression Model

http://www.theanalysisfactor.com/interpreting-the-intercept-in-a-regression-model/

and the more advanced “How to Interpret the

Intercept in 6 Linear Regression Examples”

http://www.theanalysisfactor.com/interpret-the-intercept/

Interpreting the Slope

http://blog.mathed.net/2012/07/settling-slope-and-constructive-khan.html

Working on her dissertation in the mid-1990s,

Sheryl Stump (now the Department Chairperson and a Professor of Mathematical

Sciences at Ball State University) did some of the best work to date about how

we define and conceive of slope. Stump (1999) found seven ways to interpret

slope, including: (1) Geometric ratio, such as "rise over run" on a

graph; (2) Algebraic ratio, such as "change in y over change in x";

(3) Physical property, referring to steepness; (4) Functional property,

referring to the rate of change between two variables; (5) Parametric

coefficient, referring to the "m" in the common equation for a line

y=mx+b; (6) Trigonometric, as in the tangent of the angle of inclination; and

finally (7) a Calculus conception, as in a derivative.

[note that none of these correspond

to how we view slope in statistics!]

Thinking about how to measure R^2:

eight criteria for a good R2, mentioned at http://statisticalhorizons.com/r2logistic

Kvalseth, T.O. (1985) “Cautionary note about

R2.” The American Statistician: 39: 279-285

Chapter 6

Activity idea: Determine the

sensitivity and specificity of the Cinderella shoe-fitting method. You will

have to make some assumptions.

How can you analyze this study?

http://www.healthline.com/health-news/children-autism-risk-appears-early-in-the-placenta-042513

Autism Risk Detected at Birth in Abnormal

Placentas

Written by Julia Haskins | Published April 25,

2013

Some good

sensitivity/specificity examples at http://onlinelibrary.wiley.com/enhanced/doi/10.1111/1467-9639.00076/

More stuff about

sensitivity and specificity:

http://www.npr.org/templates/transcript/transcript.php?storyId=407978049

http://www.npr.org/sections/money/2015/05/20/407978049/how-a-machine-learned-to-spot-depression

http://www.theatlantic.com/technology/archive/2014/05/would-you-want-therapy-from-a-computerized-psychologist/371552/

http://ict.usc.edu/prototypes/simsensei/

Chapter 6.7:

Estimating Probabilities Empirically Using Simulation

http://commoncoretools.files.wordpress.com/2011/12/ccss_progression_sp_68_2011_12_26_bis.pdf

students should experience setting up a model

and using simulation (by hand or with technology) to collect data and estimate

probabilities for a real situation that is sufficiently complex that the theoretical probabilities are not

obvious. For example, suppose, over many years of records, a river generates a

spring flood about 40% of the time. Based on these records, what is the

chance that it will flood for at least three years in a row sometime

during the next five years? 7.SP.8c

7.SP.8c Find probabilities of compound events

using organized lists, tables, tree diagrams, and simulation.

c Design and use a simulation to generate

frequencies for compound events.

Chapter 7

We might also have a quick quiz in class about how shifting

or scaling affects the mean, variance, SD, IQR, etc., and the proper formula

for the sample variance.

In class, we used the dotplot-histogram-crf-1000 sheet to investigate questions

like:

* Is E[X+Y] = E[X] + E[Y] ? (yes, it always is--doesn't even need

independence!)

* Is Std(X+Y) = Std(X) + Std(Y) ? (no, it basically never is!)

* Is Var(X+Y) = Var(X) + Var(Y) ? (in Excel it was close enough; with infinite

trials, it's exactly true,